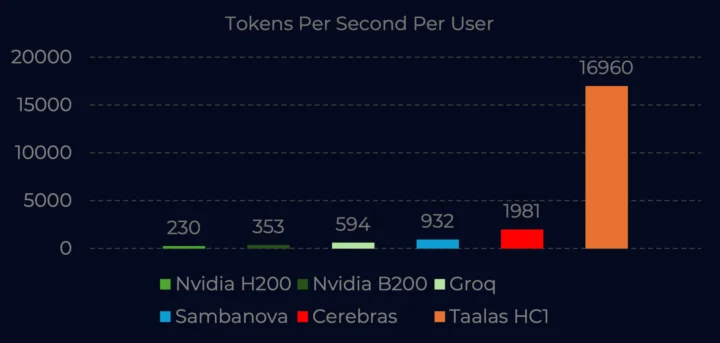

The Taalas HC1 is an AI accelerator with the Llama-3.1 8B model built into the hardware. It delivers approximately 17,000 tokens per second, which surpasses datacenter accelerators like the NVIDIA B200 or Cerebras chips.

The Taalas HC1 is about 10 times faster than the Cerebras chip, while costing 20 times less to produce and consuming 10 times less power. However, it can only run the model integrated into the hardware, currently Llama-3.1 8B, although the company highlights its “flexibility through configurable context window size and support for fine-tuning via low-rank adapters (LoRAs)”.

Typically, hardware accelerators keep memory on one side and compute on the other, each operating at a different speed, and memory bandwidth is often the bottleneck for Large Language Models. Taalas technology merges storage and compute on a single chip at DRAM-level density, which significantly boosts performance and cuts power usage.
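To get a feel for why memory bandwidth matters so much, here is a rough back-of-the-envelope sketch in Python. It assumes a conventional design where every weight is read from memory once per generated token for a single user (no batching), and 16-bit weights. The function names and the bandwidth figures are illustrative assumptions, not specifications of any particular product.

```python
def memory_bound_tokens_per_second(params_billion, bytes_per_param, bandwidth_gb_s):
    """Rough upper bound on single-stream decode speed when all weights
    are read from memory once per generated token (assumption)."""
    model_bytes = params_billion * 1e9 * bytes_per_param
    return bandwidth_gb_s * 1e9 / model_bytes


def required_bandwidth_tb_s(params_billion, bytes_per_param, tokens_per_second):
    """Memory bandwidth (TB/s) needed to sustain a given token rate
    under the same assumption."""
    model_bytes = params_billion * 1e9 * bytes_per_param
    return model_bytes * tokens_per_second / 1e12


if __name__ == "__main__":
    # 8 billion parameters at 2 bytes each (assumed 16-bit weights) = 16 GB per token.
    for bandwidth_gb_s in (1000, 8000):  # illustrative bandwidth figures
        rate = memory_bound_tokens_per_second(8, 2, bandwidth_gb_s)
        print(f"{bandwidth_gb_s} GB/s -> at most {rate:.1f} tokens/s")

    needed = required_bandwidth_tb_s(8, 2, 17_000)
    print(f"17,000 tokens/s would need about {needed:.0f} TB/s")
```

Under these assumptions, a single stream at 17,000 tokens per second would require memory bandwidth in the hundreds of TB/s, which helps explain why moving the weights next to the compute is attractive. Real systems use batching and lower-precision weights, so treat the numbers as an order-of-magnitude illustration only.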

Extremely fast inference is useful for servers whose accelerators are shared by many users, and for robots that rely on voice interaction. This became apparent when I evaluated the SunFounder Fusion HAT+, where the prompt is sent to an LLM service (Gemini AI) that generates its answer at a limited tokens-per-second rate before the text is handed over to a text-to-speech engine, causing delays and a lack of natural conversation flow. Initially, I thought the Taalas HC1 would be useful in robotics, but since it is designed for 2.5 kW servers, it is not suited to that application yet. The HC1 chip is built using TSMC’s 6nm process, measures 815 mm², and contains 53 billion transistors.

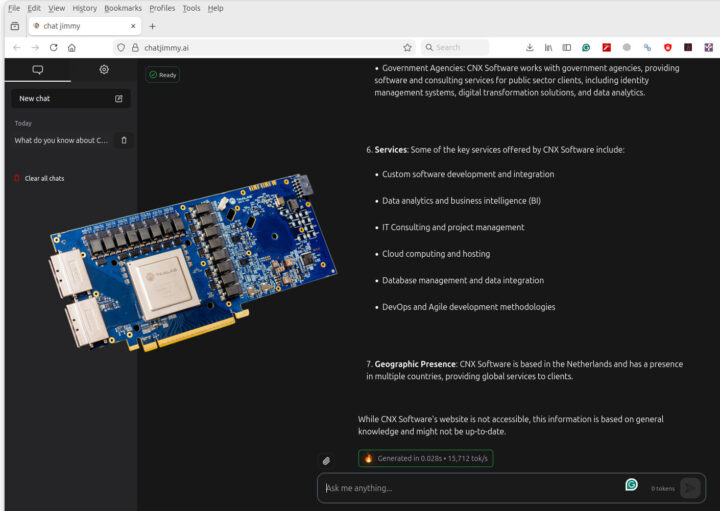

The company has introduced an online chatbot demo for public use, and it confirms the remarkable speed. It reported 19,997 tokens per second when asked “what is 2+2?”, while more typical questions like “Why is the sky blue?” or “what do you know about CNX Software?” were processed at roughly 15,000 to 16,000 tokens per second. A further test asking it to write a 100-page book about the meaning of life produced a 14-chapter book outline in 0.064 seconds at 15,651 tokens per second. It’s an 8-billion-parameter model, so responses are not always accurate.
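As a quick sanity check on those figures, the short Python sketch below multiplies the reported rate by the reported time to estimate the length of the book outline, then works out how long the same output would take at a much slower rate. The 50 tokens per second figure is purely a hypothetical comparison point, not a measurement of any service, and the demo's displayed time is rounded, so the token count is approximate.

```python
def tokens_generated(tokens_per_second, seconds):
    """Approximate number of tokens produced at a given rate over a given time."""
    return tokens_per_second * seconds


def generation_time(tokens, tokens_per_second):
    """Seconds needed to produce a given number of tokens at a given rate."""
    return tokens / tokens_per_second


if __name__ == "__main__":
    # Figures reported by the demo: 15,651 tokens/s for 0.064 s.
    outline_tokens = tokens_generated(15_651, 0.064)
    print(f"Outline length: about {outline_tokens:.0f} tokens")

    # Hypothetical slower service for comparison (assumed value).
    slow_rate = 50
    print(f"Same output at {slow_rate} tokens/s: "
          f"{generation_time(outline_tokens, slow_rate):.1f} s")
```

So the outline was roughly a thousand tokens long, and a service generating tens of tokens per second would need around 20 seconds for the same output, which is the kind of delay that breaks the flow of a voice conversation.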

The company is now working on a mid-sized reasoning LLM, still based on the HC1 silicon, scheduled for launch in Q2. Looking further ahead, the second-generation silicon platform (HC2) will offer higher density and faster execution, with deployments expected by the end of the year. More information is available in the announcement.