
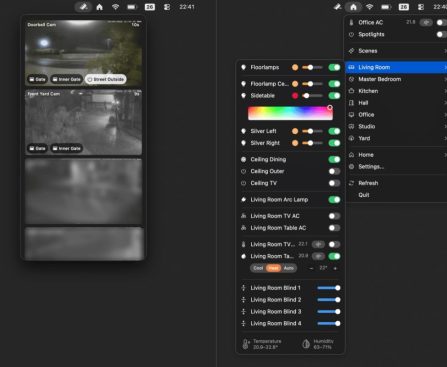

Indie App Spotlight: ‘Itsyhome’ Brings Smart Home Controls to the Mac Menu Bar

### Itsyhome: An All-In-One Smart Home Control Application for Mac

Managing multiple smart home devices can quickly become overwhelming, particularly for Mac users. Apple’s Home app provides basic control, but many users want more flexibility and extra features. Enter **Itsyhome**, a capable app designed to streamline smart home management right from your Mac’s menu bar.

#### Itsyhome Overview

Itsyhome isn’t limited to Apple’s HomeKit ecosystem; it also supports Home Assistant, making it a flexible choice for users with mixed smart home setups. The app offers an intuitive interface that brings all of your smart home controls together in one easily accessible place.

#### Key Features

1. **Support for Multiple Ecosystems**: Unlike many smart home apps, Itsyhome can connect to devices through both HomeKit and Home Assistant. This lets you manage a broad range of devices without being locked into a single ecosystem.

2. **Menu Bar Access**: All of your smart home controls live in the Mac menu bar, where you can quickly control lights, thermostats, fans, air conditioners, door locks, and cameras. The app groups devices by room for quick access and management.

3. **Customizable Layout**: Itsyhome lets you rearrange rooms and devices to suit your preferences. You can also create custom groups to control several devices together and pin frequently used devices for instant access.

4. **Hidden Devices**: For a cleaner experience, Itsyhome lets you hide specific devices from the menu bar so that only the most relevant controls are displayed.

5. **Keyboard Shortcuts and Stream Deck Support**: The app supports keyboard shortcuts and integrates with Stream Deck, so you can toggle devices quickly with a single keybind or button press.

6. **Apple TV Remote**: A highlight of Itsyhome is its companion Apple TV remote app, which lets you control your Apple TV without the physical remote or the phone app and includes an app launcher for easy navigation.

#### How to Download Itsyhome

Itsyhome is available for free on the [Mac App Store](https://apps.apple.com/us/app/itsyhome/id6758070650?mt=12) for Macs running macOS 14.0 Sonoma or later. The free version offers a substantial set of features, and a one-time $12.99 upgrade unlocks additional capabilities such as camera support, iCloud sync, and webhook integration.

Itsyhome is also fully open source, with its code available on [GitHub](https://github.com/nickustinov/itsyhome-macos), so developers and tech enthusiasts can inspect it and contribute to its development.

#### Final Thoughts

For Mac users looking to get more out of their smart home, Itsyhome is an attractive option. Its rich feature set, multi-ecosystem support, and user-friendly design make managing smart devices much simpler, making it an indispensable tool for anyone into home automation. Whether you’re adjusting lights or controlling your Apple TV, Itsyhome delivers the convenience and flexibility that modern smart home users expect.

Four Key Costco Tablet Accessories to Improve Your iPad Experience

The iPad Pro can handle photo editing, video editing, and digital art, while more basic models are well suited to note-taking and even professional productivity tasks. The right accessories can change how you use an iPad: many are aimed at boosting your creativity, and many others improve comfort and convenience. Costco offers a variety of tablet accessories, and we drew on our tech expertise to pick the ones that can elevate your iPad experience.

Apple Pencil Pro

Amazon’s Fire TV Stick Still Uses Micro-USB in 2026, Like It’s 2016

strange USB devices that you can connect to your smartphone.

Even Apple abandoned its proprietary Lightning port in favor of USB-C with the iPhone 15 lineup in 2023. Amazon, on the other hand, still relies on micro-USB, as if the older port had some unique advantage you’d lose by switching to the newer USB-C standard. It’s not 2016 anymore, and the company needs to move to USB-C, because sticking with micro-USB comes with real drawbacks.

Micro-USB on the Fire TV Stick is frustrating in 2026


Cost-Effective Ways to Improve Your Television’s Sound Quality

several television configurations that can entirely transform your audio.

To do this, open your TV’s settings and go to the Sound or Audio section. Lowering the “Bass” and raising the “Treble” usually improves clarity, but no single setting works for everyone, so experiment with different combinations until the audio improves and dialogue becomes easier to make out. If your TV has preset sound modes such as Movie, Standard, or Speech Boost, try those too; switching to Movie or Speech Boost is often the most helpful.

Some modern TVs include automatic sound calibration, such as Panasonic’s Space Tune, which tunes the output to the layout of your room. The TV plays a series of test signals to gauge its distance from walls, objects, and the viewer, then adjusts the frequency response, treble, and bass for the best result. If your TV has a similar feature,

Amazon Shoppers Praise Smart Water Bottle as ‘The Best Invention Ever’

Water makes up roughly 50% to 65% of a person’s body weight and plays a key role in regulating temperature, aiding digestion and nutrient absorption, and eliminating waste. Yet many people fall short of the commonly recommended daily intake of about eight glasses. If you find you’re not drinking enough, the WaterH Boost Smart Water Bottle may be worth a look: it pairs with your smartphone to help you track your hydration habits.

With an average rating of 4.3 stars from more than 1,800 Amazon reviews, the Boost Smart Water Bottle is popular with buyers. One customer described the WaterH bottle as “incredible,” calling it the “best invention ever.” Another reviewer said that “the drinking reminders are incredibly helpful, I’m consuming significantly more and actually reaching my target.” Like many gadgets worth trying, this smart water bottle has genuinely practical features, such as hourly reminders to drink and a light that glows if you ignore the notifications.

Advanced features of the WaterH water bottle

Normally priced at $54.99 for the 32-ounce model, the Boost water bottle is currently 15% off. It is made from BPA-free stainless steel, is dishwasher safe, and comes in several colors, and its triple-walled vacuum insulation keeps drinks hot or cold. One 5-star reviewer noted that, despite the insulation, the WaterH bottle fits perfectly in their car’s cup holder.

The bottle charges over USB-C and has a battery rated for up to two weeks. Like many smart devices, it connects to your smartphone via Bluetooth, but you’ll need to download the companion app to track your statistics and get insights. According to WaterH, an AI algorithm estimates how much you drink by measuring the flow rate and the bottle’s angle. The lid folds back 180 degrees, and you can set a daily hydration goal and share it with friends. That combination makes it an ideal tech gift for the person who seems to have everything.

Overview of ESR’s Newest Accessories for iPad

# ESR’s Latest Collection of iPad Accessories: Boosting Versatility for Everyday Users

Accessory maker ESR has launched a new range of iPad accessories designed to make the iPad more useful for everyday users. The lineup targets people who regularly switch between different ways of using their iPad: typing, drawing, writing, and general tablet use such as gaming or watching videos.

## Shift Magnetic Case

The **Shift Magnetic Case** reinvents the classic folio-style iPad case. It starts as a protective cover and can be upgraded with a folio section that provides multiple viewing angles. A key feature is the folio’s flexible magnetic positioning, which lets you stand the iPad in either landscape or portrait orientation. The case is made from a rubbery material that many users find more durable than Apple’s silicone options.

The Shift case also has an inset for the Apple Pencil and a pocket on the back, which suits anyone who prefers a sleeker look without a bulky side holder. Be aware, though, that the case is fairly heavy and thick when fully assembled.

The Shift Magnetic Case fits all recent iPad models except the iPad mini and comes in several colors, including black, dark blue, light blue, brown, purple, pink, red, and silver. Prices range from about $45 to $60, depending on the iPad model.

## Shift Detachable Keyboard Case

The **Shift Detachable Keyboard Case** combines productivity with flexibility. Its standout feature is a Bluetooth keyboard that offers a complete iPadOS typing experience, with a function row, backlit keys, and a spacious trackpad that supports gestures.

Because the keyboard detaches, you can type with it away from the iPad or set the iPad up at different angles, including upright for video calls. The keys feel fine, but the trackpad may feel less premium than those on higher-end keyboards.

The case itself uses a conventional kickstand design, with extra options for vertical positioning and a low-angle setup that is ideal for drawing or writing. Like the Shift Magnetic Case, it has a dedicated slot for the Apple Pencil. The Shift Detachable Keyboard Case is available for recent iPad models for around $90.

## Geo Digital Pencil

Of the new accessories, the **Geo Digital Pencil** stands out as a favorite. Priced at just $37, it offers features similar to the USB-C Apple Pencil, plus a few of its own. Most notably, it has full Find My support, so you can track it from your devices and put it in Lost Mode with contact information for whoever finds it.

In terms of performance, the Geo Digital Pencil delivers low latency and tilt sensitivity, closely resembling the Apple Pencil experience. However, it lacks certain advanced features present in the Apple Pencil Pro, such as wireless charging and pressure sensitivity. Nonetheless, its affordability makes it an appealing choice for casual users.

The Geo Digital Pencil is available in various colors, including white, black, blue, pink, and purple, and includes a USB-C charging cable and an extra pencil tip.

## Armorite Screen Protectors

Lastly, ESR offers **Armorite tempered glass screen protectors**, which have proven to be a dependable option for many users. The latest version includes a matte “paper-feel” option, perfect for those who mainly use their iPads for writing or drawing.

These screen protectors come with an alignment tray that makes installation easier, a feature common with phone protectors but rare for tablets. The standard glossy protectors come in a two-pack for around $25, while the paper-feel version costs about $30, depending on the iPad model.

Overall, ESR’s latest iPad accessories aim to make the iPad more versatile for everyday users, with options to suit a range of needs and preferences. Whether you’re after protection, productivity, or creative tools, these accessories offer worthwhile upgrades to the iPad experience.

The Honor Magic 8 Pro Photography Kit Reshapes Expectations for Smartphone Camera Quality

Honor has introduced a groundbreaking phone accessory that aims to change how periscope cameras on smartphones are used. The Honor Magic 8 Pro Photography Kit is designed to elevate the photography experience by turning the phone into something that closely resembles a professional camera. The all-in-one kit includes a dedicated phone case, a magnetic camera grip, several straps, lens adapters, and a telephoto extender.

The kit is similar to other photography kits on the market, such as the Vivo X200 Photography Kit and the Oppo Find X9 Pro’s kit, but it sets itself apart in a few ways. For one, Honor has partnered with Telesin, a company known for its photography accessories, to make camera filters for the Honor Magic 8 Pro easy to buy. Telesin does not currently offer additional lenses, however.

The Honor Magic 8 Pro Photography Kit is an 8-piece set that includes a magnetic camera grip and a lens extender. With Teleconverter mode enabled, users can choose zoom settings from 200mm to 800mm and zoom as far as 5400mm using the zoom wheel. The kit weighs 583g, far lighter than a professional camera such as the Panasonic Lumix GH5.

The magnetic grip has a two-stage shutter button, a quick-access gallery button, a mode-switching wheel, and a zoom switch. The kit also includes a wrist strap and a neck strap for extra security. Because the grip attaches magnetically, it rotates easily, making it simple to switch between portrait and landscape shots.

The kit’s telephoto extender improves photo and video quality in all lighting conditions, delivering more detail, better depth of field, and stronger subject separation. It is especially effective for portraits, producing striking separation between foreground and background without relying on software.

The telephoto extender performs exceptionally well in many areas, but it struggles somewhat with macro photography, especially with small subjects like flowers. Its color science also differs from that of the built-in telephoto lens, producing more accurate colors.

Overall, the Honor Magic 8 Pro Photography Kit is an essential accessory for telephoto enthusiasts, offering improved stability and image quality. It is especially useful for shooting distant subjects, making it ideal for parents whose children perform on stage or for concertgoers.

Snag Amazing Deals on Bose Headphones and Astro Bot This Weekend

Welcome to the weekend, friends! While the rest of our team was checking out Samsung’s forthcoming Galaxy S26 lineup and prepping for Apple’s “special experience” next week, we’ve been sifting through Woot’s “Video Games for All” sale and a truly weird slate of deals that, frankly, don’t have a throughline. (Some of us have also […]

Old Hard Drives Are Gaining Value Amid Rising Supply Issues

price increase across numerous PC components such as memory, GPUs, and SSDs. Hard drives (HDDs), however, had remained largely unaffected, since they are seen as outdated now that modern SSDs offer far better performance. Still, HDDs have one clear advantage: they provide large amounts of storage at a low cost. That has driven growing demand for them, especially as the price gap between HDDs and SSDs keeps widening.

Companies in the AI and cloud sectors are buying storage drives in massive volumes, which affects both availability and pricing. As a result, your old hard drives have unexpectedly become more valuable, useful not only for backups but also as primary storage at home, since it will likely take a long time for hardware demand to return to normal.