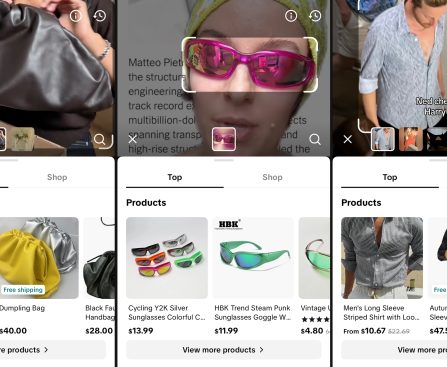

With the new iPhone models selling remarkably well, numerous accessory manufacturers have refreshed their lineups and launched new products aimed at enhancing the iPhone 17 Pro experience. Among these are Sandmarc's recently unveiled tetraprism lens, a revamped Game Boy-style case from Gamebaby, and a 3-in-1 wireless charger that can power the new iPhones at up to 25W thanks to Qi2.2.

Although speculation surrounding the iPhone 18 models and the forthcoming foldable iPhone has intensified, the iPhone 17 Pro remains one of Apple's most successful releases. Not only does it feature a redesigned look built around Apple's modified camera plateau, but its Cosmic Orange finish has also contributed significantly to its sales.

In addition, Apple's latest device packs a powerful A19 Pro chip, a vapor chamber that keeps overheating in check during intensive tasks, and new camera features that make this iPhone an excellent upgrade for anyone still using an iPhone 15 or older. I have been trying out some exciting new accessories recently and think you would appreciate them too, along with other new releases that could further enhance the iPhone 17 Pro's capabilities.