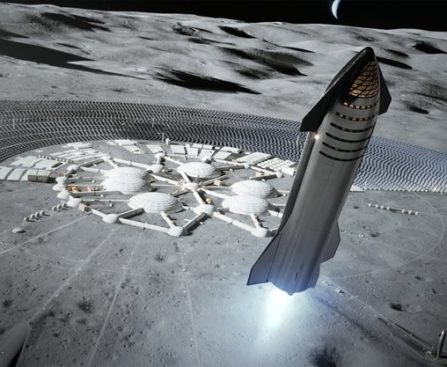

first crewed journey to the moon’s surface in over fifty years. Two earlier Artemis missions were planned to prepare for that lunar landing. Artemis I was a success. Artemis II is set to be the first crewed flight around the moon since Apollo 17. However, it has faced delays and is now projected to launch as early as April, which would inevitably push back Artemis III as well. Consequently, NASA has now declared that the Artemis III moon landing is cancelled, primarily due to the hurdles and delays in the earlier missions. The U.S. return to the moon will be pushed to a later date, following gradual and “evolutionary” steps.

NASA Administrator Jared Isaacman announced the news during a press briefing at Kennedy Space Center on Friday, February 27. According to NPR, Isaacman stated that the current plan “is simply not the correct route forward.” Artemis II will continue as planned, likely in April. NASA aims to take incremental steps to improve the program’s chances of success, reportedly developing additional risk-reducing modifications to address the delays and issues encountered thus far. Artemis III remains a mission and will still fly; however, it will stay in low Earth orbit, meaning no one will land on the moon. It is currently set for mid-2027. Ultimately, an Artemis IV mission is expected to land a crew on the lunar surface sometime in 2028, if everything proceeds as planned.