Pokémon Day is always a big deal for Nintendo fans, but with this year being the franchise’s 30th anniversary, the next Pokémon Presents stream is likely to bring the heat when it begins on February 27th at 9AM ET. With Pokémon Legends: Z-A behind us and Pokémon Pokopia right around the corner, chances are high […]

Blog Posts

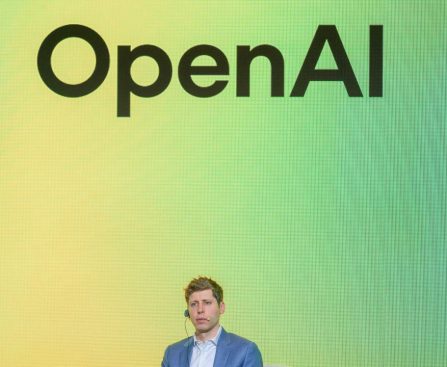

Nearly 50% of ChatGPT Usage in India Comes from 18 to 24-Year-Olds, Says OpenAI

OpenAI said on Friday that users aged 18 to 24 account for nearly 50% of all messages sent by Indians to ChatGPT, and that users under 30 account for 80% of usage in the country.

macOS 26.3 Contains Mentions of Two Additional Studio Display Variants, as Per Reports

New findings in macOS 26.3 indicate that several new Apple hardware products are on the horizon. Ahead of Apple’s special “experience” on March 4, there are fresh references to two new models of the Studio Display, along with the frequently rumored low-cost MacBook featuring the A18 Pro chip.

### Are new Mac external displays on the way?

The references were discovered this week by Macworld within kernel extensions (kexts) in the public release of macOS 26.3 issued last week. These files mention three unreleased identifiers for Apple products: J700, J427, and J527.

J700, as earlier reported, is the codename for Apple’s anticipated low-cost MacBook equipped with the A18 Pro. This laptop is expected to debut next month, showcasing a display smaller than 13 inches. Bloomberg has also indicated that it will be constructed from aluminum and that Apple has experimented with various vibrant colors, such as light yellow, light green, blue, pink, classic silver, and dark gray.

However, what might be even more intriguing are the identifiers J427 and J527, which appear to correspond to the two new Studio Display models. How the two differ remains unclear, but Macworld speculates that one of them could be either a larger-screen variant or a more budget-friendly version with fewer features.

This isn’t the first time these two model identifiers have surfaced, however. Bloomberg referred to the same model numbers back in March. Here are the two scenarios Mark Gurman presented at that time:

> Apple is creating both and will select one for launch, or it’s a second model with an alternative screen size or specification set. After all, the Pro Display XDR is becoming a bit outdated too, so replacing that model could be logical.

Currently, Apple offers two external displays: the Studio Display (starting at $1599) and the Pro Display XDR (starting at $4999). The Studio Display debuted in 2022 alongside the Mac Studio, while the Pro Display XDR was introduced in 2019 with the Mac Pro.

The presence of both identifiers in macOS 26.3 suggests they are separate products still under development. Whether Apple intends to release both remains uncertain. We should learn more shortly, with Apple expected to announce several new products during the week of March 2.

China Enforces Prohibition on Extendable Car Door Handles for Safety Concerns

Bloomberg disclosed these issues in September 2025.

In the case of a crash or battery failure, door handles that sit flush with a vehicle may fail, complicating access for emergency services trying to open a car door when necessary. Consequently, vehicle manufacturers in China will be prohibited from using recessed or flush door handles in their vehicles, and automakers must adhere to additional regulations regarding these mechanisms. Although the new laws also pertain to gas-powered vehicles, electric vehicles are anticipated to be more significantly affected by the new rules.

Because China is the largest EV market globally, the new regulations could have a worldwide impact: manufacturers that sell in multiple countries must decide whether to meet China’s standards only in that market or apply the changes across all their vehicles.

China enacts new rules on flush door handles

Tesla’s More Affordable $60,000 Cybertruck Remains a Cybertruck

Tesla has announced a new all-wheel drive Cybertruck that starts at $59,990, the cheapest the controversial truck has been sold for yet – though still well above the $40,000 price tag Elon Musk had initially promised. It’s been joined by a $15,000 price cut for the high-end Cyberbeast variant, as Tesla doubles down on its […]

Meta Will Sabotage Its Smart Glasses by Being Itself

Whenever I write about Meta’s Ray-Ban smart glasses, I already know the comments I’m going to get. Cool hardware, but hard pass on anything Meta makes; will wait for someone else to come along. It’s hard to imagine that sentiment changing anytime soon after The New York Times reported that Meta mulled launching facial recognition […]

G42 of UAE Partners with Cerebras to Deploy 8 Exaflops Computing Power in India

Abu Dhabi-based tech company G42 has partnered with U.S.-based chipmaker Cerebras to deploy eight exaflops of compute through a new system in India.

The OpenAI Alumni Network: 18 Startups Founded by Former Team Members

The OpenAI mafia: 15 of the most notable startups founded by alumni

Five Creative Methods to Reuse Your Old Amazon Fire TV Stick

Streaming sticks, due to their affordable pricing, often feel like disposable items, similar to an old mouse or keyboard that you replace without second thoughts.

Interestingly, an aging Amazon Fire TV Stick can still prove to be a useful device, even if its speed has diminished over time. Naturally, it may not serve as your main streaming device, but it can address various issues around the household, assist you during travels, or even find a role in a small business environment.

The upside is that repurposing a used Amazon Fire TV Stick takes little time, effort, or money. There’s no need to modify the hardware or purchase additional accessories. Here are straightforward ways to repurpose the streaming stick, save some money, and reduce e-waste at the same time.

Utilize it as a travel companion

Samsung Unveils One UI 8.5 Beta 5 Before Galaxy S26 Debut

Final tweaks before we head out.