**Meta’s Pivotal Court Case: Consequences for Social Media and Child Protection**

This week, Meta CEO Mark Zuckerberg testified in a pivotal court case examining the effects of social media on mental health, particularly among children and adolescents. Brought by New Mexico, the lawsuit alleges that Meta has failed to safeguard younger users on platforms such as Facebook and Instagram from online threats. It contends that Meta’s products have enabled the exploitation of minors and contributed to grave harms such as human trafficking.

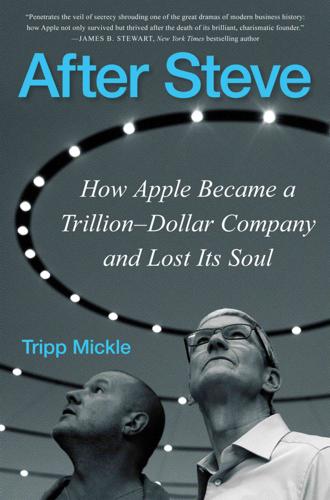

Zuckerberg’s testimony disclosed that he had contacted Apple CEO Tim Cook to discuss the welfare of young users. The exchange, which dates back to February 2018, highlights the long-running friction between the two technology leaders, mainly over their approaches to user safety and privacy. Although the specifics of their discussion remain private, it points to rising concern among tech executives about the effects their platforms have on at-risk groups.

The accusations leveled against Meta are serious. The lawsuit claims the company has designed a “hazardous product” that not only targets children but also facilitates their exploitation in both digital and physical realms. This legal action is part of a larger pattern of lawsuits facing Meta, reminiscent of the legal battles against tobacco companies during the 1990s, which aimed to hold them accountable for the detrimental effects of their products.

During the trial, defense lawyers questioned Zuckerberg about his exchanges with Cook, underscoring the need to address the mental health epidemic linked to social media use. Zuckerberg expressed concern for the welfare of adolescents and children using Meta’s platforms and indicated a readiness to partner with Apple on safety initiatives.

The relationship between Meta and Apple has been fraught, particularly following privacy controversies surrounding Facebook. Cook has openly criticized Facebook’s handling of user data, which has further strained their relationship. Notably, earlier communications show Zuckerberg proposing that Meta limit research into the negative impacts of its platforms, citing Apple as a model for scaling back such research.

A central disagreement between the two companies is how to verify the age of app users. Meta asserts that responsibility for age verification should rest with platform providers like Apple and Google, while Apple maintains that developers should be responsible for ensuring user safety on an app-by-app basis.

As this significant trial progresses, its outcome may have far-reaching repercussions for Meta, the social media sector, and the ongoing debate about online child safety. The case not only raises questions about corporate accountability but also underscores the urgent need for effective strategies to shield young users from the potential hazards of social media.