Sai Vsr

At its core, a standard GPU is a visual problem solver. It was designed to render frames quickly, handle textures and lighting, and keep games looking smooth. The same parallel arithmetic that produces those visuals also turns out to be very effective at processing large amounts of data at once. That is why high-end graphics cards and general-purpose data center GPUs have been used for heavy compute workloads. For a long time, that was enough horsepower to get new ideas off the ground.

The drawback is that a consumer or general-purpose compute GPU still carries a significant amount of hardware logic that exists only for graphics. Its memory setup is optimized for pushing pixels to a display rather than for constantly moving large sets of numbers. Running demanding workloads on such a GPU is certainly possible, but once the data volume grows and multiple cards have to cooperate, communication delays start to drag down performance. You end up wasting energy and time just waiting for the chips to synchronize.

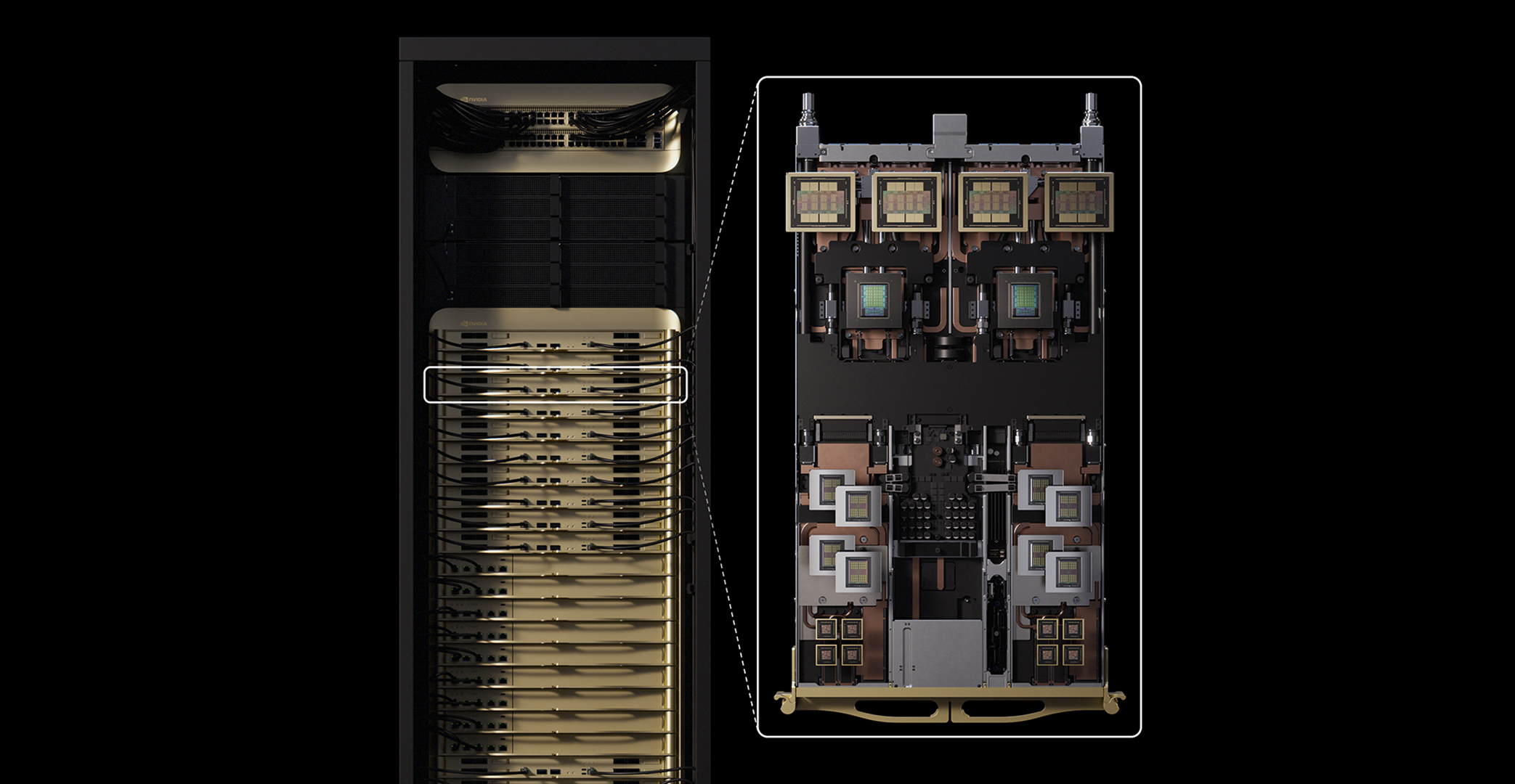

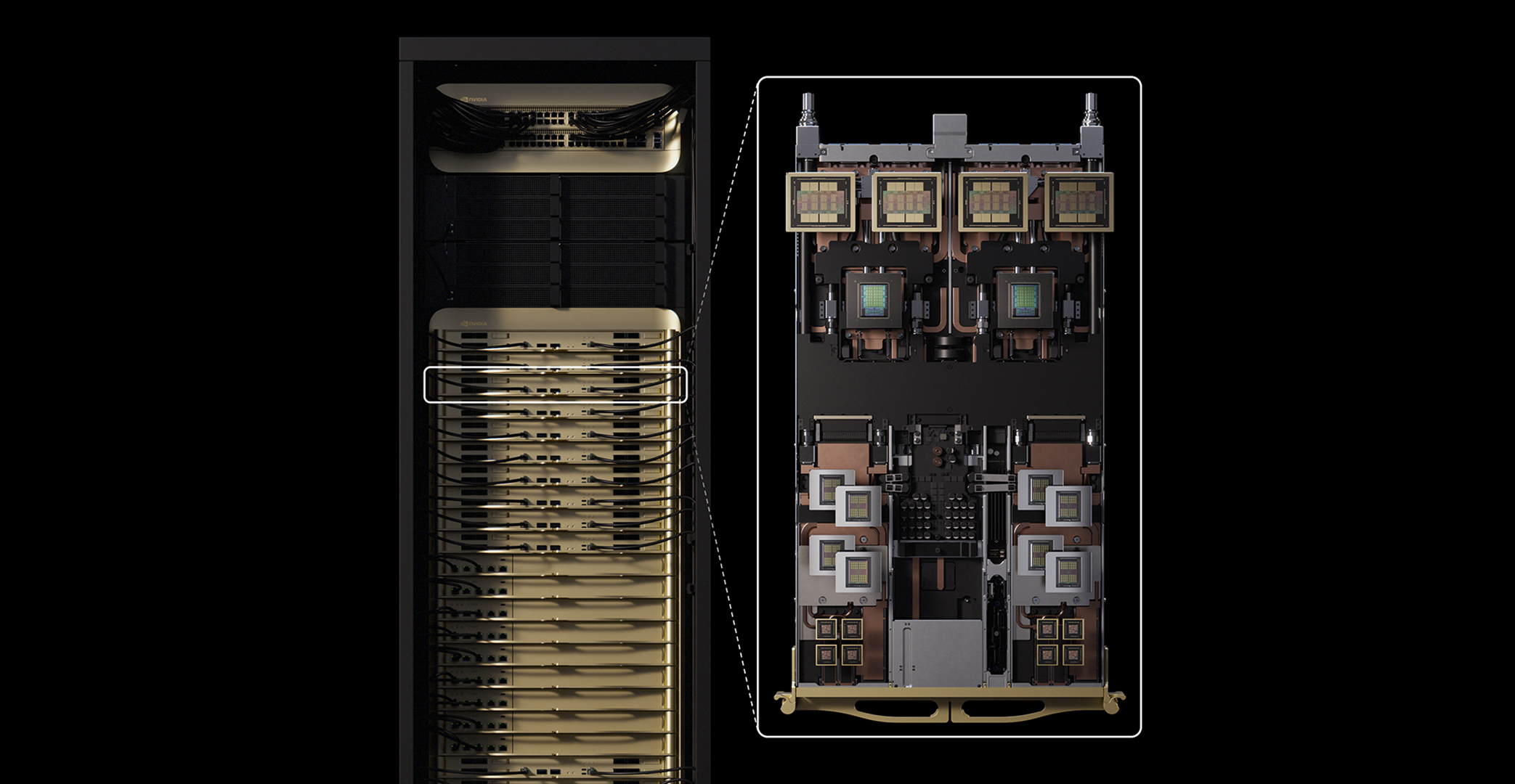

If you’re working with smaller models or serving quick predictions, a typical GPU can still feel responsive. As soon as you scale up or start training across many machines, however, those graphics-oriented design choices become a burden. This realization led NVIDIA to build accelerators aimed exclusively at compute. They drop the overhead tied to driving a display, increase memory bandwidth, and are engineered so that many chips can work together without constantly getting in each other’s way.
